What Enterprise Buyers Really Expect from AI Products and How to Win Them

What enterprise buyers actually require before they trust AI to act autonomously

👋 Hi, it’s Gaurav and Kunal, and welcome to the Insider Growth Group newsletter, our bi-weekly deep dive into the hidden playbooks behind tech’s fastest-growing companies.

Our mission is simple: We help you create a roadmap that boosts your key metrics, whether you're launching a product from scratch or scaling an existing one.

What We Stand For

Actionable Insights: Our content is a no-fluff, practical blueprint you can implement today, featuring real-world examples of what works—and what doesn’t.

Vetted Expertise: We rely on insights from seasoned professionals who truly understand what it takes to scale a business.

Community Learning: Join our network of builders, sharers, and doers to exchange experiences, compare growth tactics, and level up together.

A pattern we keep seeing with AI companies is that things fall apart after the demo.

The product looks impressive in a controlled environment. The workflows are clean. The results feel obvious. But once the product hits real enterprise systems, real data, and real constraints, implementation slows down or stalls entirely. Integrations become brittle. Trust starts to erode. ROI gets fuzzy. Deals quietly die.

Enterprise buyers are tired of this.

There’s a real sense of AI fatigue right now. Buyers aren’t saying no to AI - they’re saying no to products that look promising but can’t survive real-world deployment. If an AI product can’t clearly own a specific P&L outcome - reducing cost, increasing revenue, or removing operational overhead - it doesn’t make it past procurement.

We see this pattern over and over again. And in this post, we will break down what actually separates AI agents that demo well from those that are truly ready to be sold and scaled inside the enterprise.

If you’re building or selling AI products into enterprises, understanding this shift is critical. The enterprise buyer is rarely an individual; it is usually a group of stakeholders such as the business owner, IT, legal, procurement, and finance. The primary contact may be a leader like the CMO, CIO, or COO, depending on whether the organization needs to grow the business or optimize costs. These buyers evaluate products as investments that should lead to improved business outcomes.

This article is for founders, product leaders, and GTM teams selling AI into enterprise who want to understand what has changed and how to build and sell AI products that actually win.

Introducing Manoj Kokal:

Manoj is a Director of Product at Automation Anywhere, where he leads the Agentic AI product focused on ITSM automation. He is a product leader with over two decades of experience in product strategy and leadership. Over the past eight years, he has specialized in building enterprise AI products, significantly increasing revenue for each of the products he’s owned and taking them from $5M to $30M in ARR.

A few terms we’ll use throughout this post

Before we go deeper, here are a few concepts that come up repeatedly. If you already know them, feel free to skim.

ITSM (IT Service Management)

How large organizations manage internal IT issues like password resets, software access, device problems, and outages at scale. Think of ITSM as the workflows and systems behind “my laptop isn’t working” inside an enterprise.

Agentic AI

AI systems that don’t just provide answers, but can make decisions and take action across workflows, often autonomously and within defined guardrails.

System of Record

The authoritative system where data lives and actions must ultimately be written back (e.g., ServiceNow for IT, Salesforce for sales, ERP systems for finance).

Guardrails

Rules, thresholds, and controls that define what an AI agent can and cannot do: when to act autonomously, when to escalate, and how risk is managed.

AI Observability

The ability to see how an AI system made a decision: what data it used, which rules were applied, when humans intervened, and how outcomes tie back to business metrics.

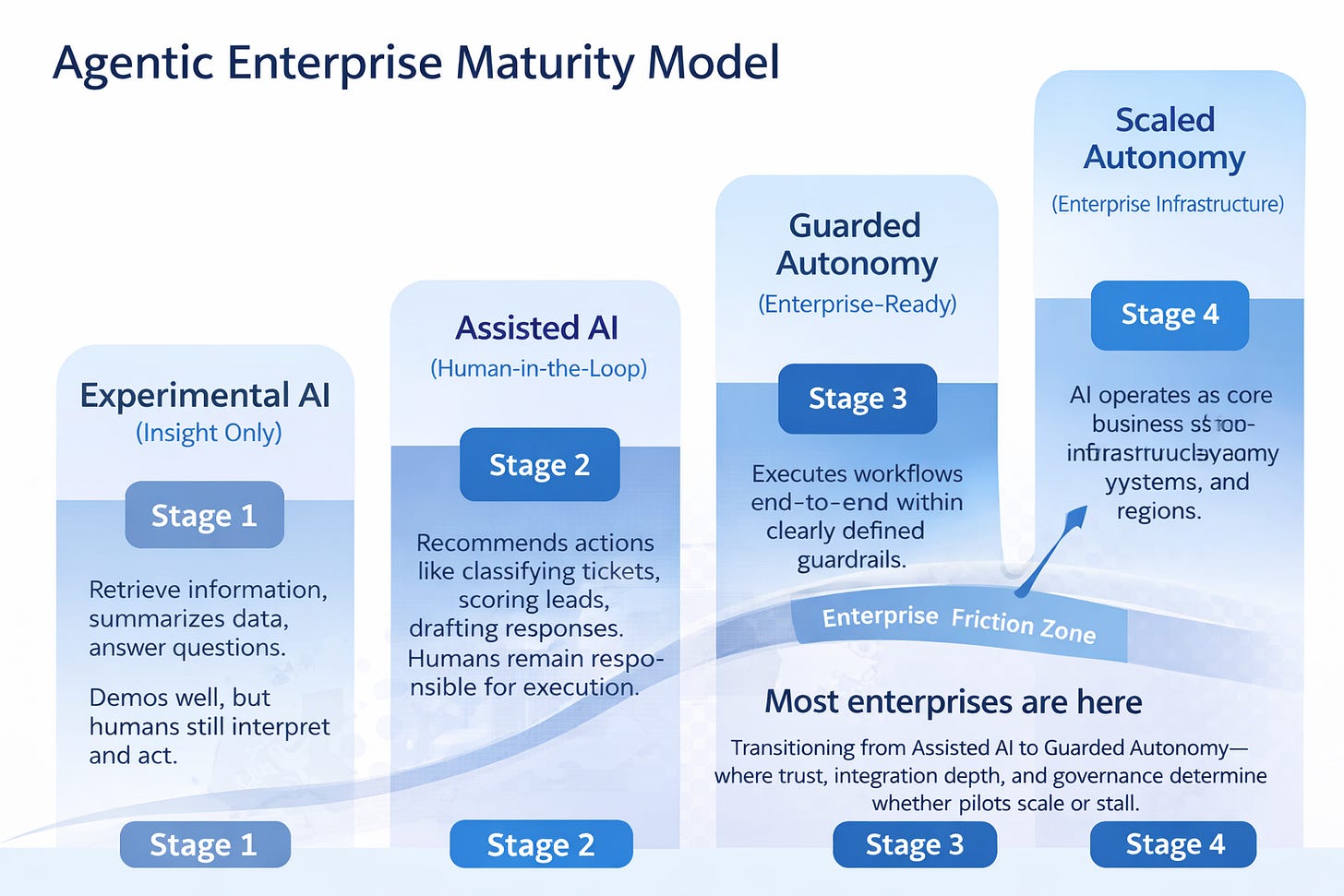

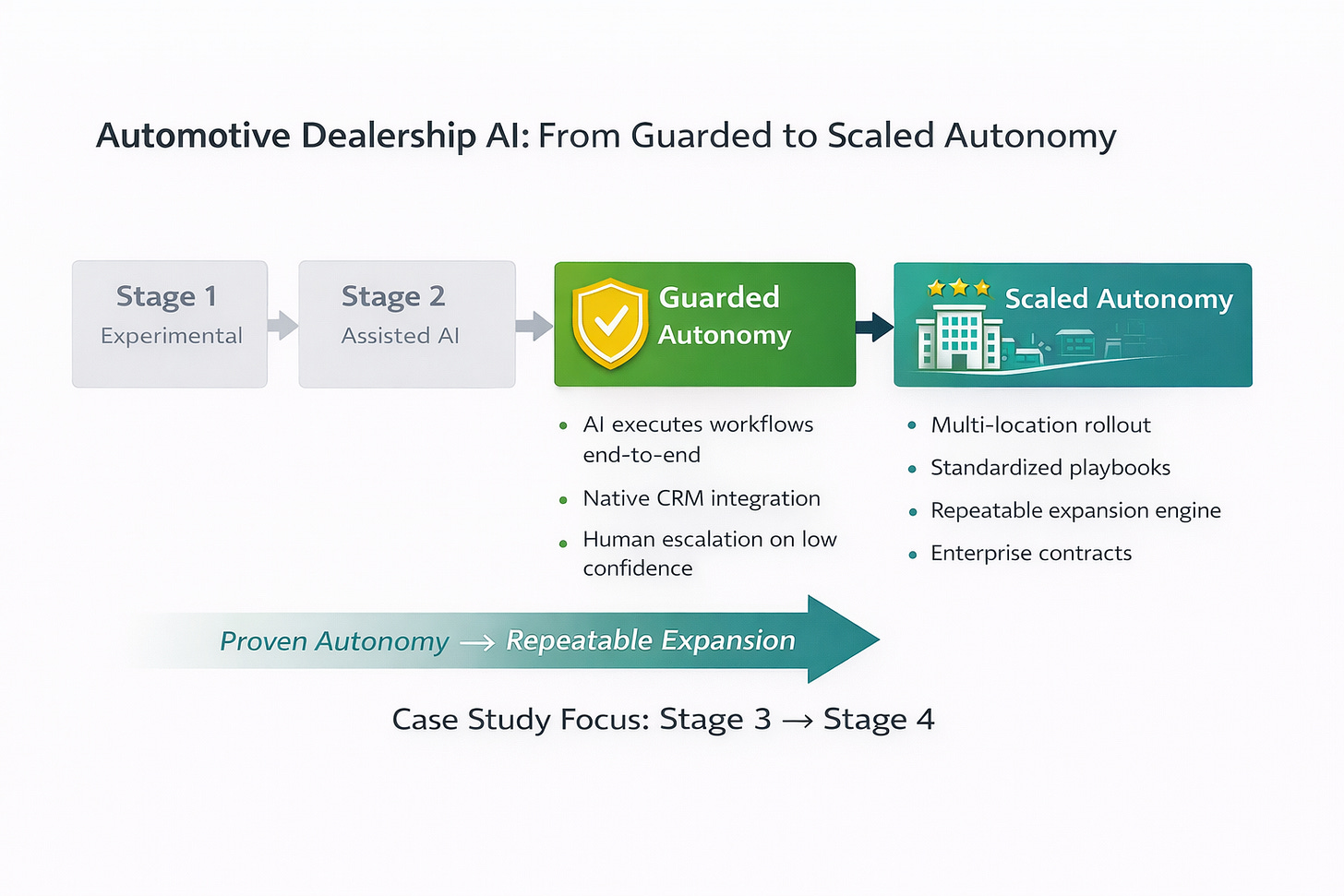

The Agentic AI Maturity Model for Enterprises:

Most enterprise AI journeys follow the same progression, even if teams don’t explicitly name it.

Early on, AI shows up as a feature. Then it becomes an assistant. Only later, after trust, integration, and governance are earned, does it become something enterprises are willing to rely on autonomously.

We’ve found it helpful to think about enterprise AI adoption across four stages:

Stage 1: Experimental AI (Insight Only)

AI is used to retrieve information, summarize data, or answer questions. These experiences often demo well, but they still rely on humans to interpret results and take action. Value is indirect and difficult to tie to a P&L line item.

Stage 2: Assisted AI (Human-in-the-Loop)

AI begins recommending actions - classifying tickets, scoring leads, drafting responses - but humans remain in control of execution. This is where many enterprise pilots live today. Early ROI appears, but scaling is slow because people remain the bottleneck.

Stage 3: Guarded Autonomy (Enterprise-Ready)

AI starts executing workflows end-to-end within clearly defined guardrails. It integrates directly with systems of record, escalates only when confidence drops, and produces observable, auditable outcomes. This is the inflection point where pilots turn into rollouts.

Stage 4: Scaled Autonomy (Enterprise Infrastructure)

AI operates as part of core business infrastructure. Autonomy scales across teams, systems, and regions. Outcomes are measured, risk is managed, and expansion happens naturally.

Note: The majority of enterprises are transitioning between Stage 2 (Assisted AI) and Stage 3 (Guarded Autonomy).

They’ve moved beyond simple chatbots, but true end-to-end autonomy is still constrained by trust, integration depth, and governance requirements.

This gap between AI that assists and AI that can be trusted to act is where most enterprise deals stall. The rest of this article, and the case studies that follow, focus on how teams successfully cross that gap.

Why Stage 2 (“Assisted AI”) stalls in enterprise deals

Assisted AI Creates Insight, Not Outcomes

In Stage 2 (Assisted AI), systems analyze data and recommend actions, but humans still execute the work. This works in demos but breaks down in production. Enterprises don’t buy AI for better suggestions; they buy it to remove work entirely. Until AI can own an outcome end-to-end, value remains indirect and difficult to tie to a P&L line item.

Humans Remain the Bottleneck

Stage 2 (Assisted AI) still depends on human availability, consistency, and judgment. As volume increases, efficiency gains flatten because people - not systems - limit throughput. This is why many enterprise pilots show early promise but stall during expansion: AI speeds up decisions, but doesn’t eliminate operational drag.

ROI Is Hard to Defend at Scale

In Assisted AI deployments, ROI is fragmented - time saved here, productivity gained there. That’s hard for finance and procurement teams to defend during budget reviews and renewals. Stage 3 (Guarded Autonomy) changes this by producing measurable outcomes like higher auto-resolution rates, fewer escalations, and lower cost per transaction.

Trust Gaps Prevent Autonomy

Enterprises hesitate to grant autonomy without visible controls. Stage 2 (Assisted AI) systems often lack clear guardrails, auditability, and explainability, raising concerns about hallucinations, non-deterministic behavior, and security at scale. Without trust mechanisms built into the product, enterprises won’t allow AI to act independently.

How enterprises evaluate AI when moving to Stage 3 (Guarded Autonomy)

Understanding the evaluation criteria helps vendors position their solutions effectively:

Agentic Capability Is Now Table Stakes

Buyers look beyond chatbots and ask: Can the AI create workflows? Can it make decisions? Can it take actions in systems of record? Agentic capability - not just knowledge retrieval - is now a core evaluation criterion.

Increasingly sophisticated buyers also assess support for standard protocols like MCP (Model Context Protocol) or agent-to-agent communication, recognizing that flexibility to orchestrate agents across vendors may matter long-term.

Domain Expertise Dramatically Shortens Time to Value

One of the strongest differentiators today is domain specificity. Generic AI struggles in complex enterprise environments because it lacks the business context needed to operate effectively.

For example, an ITSM buyer will favor an AI product that understands incidents versus requests, knows IT workflows and escalation rules, and integrates with ServiceNow out of the box. Domain-specific models trained on authoritative business data dramatically reduce deployment time and improve accuracy.

Evaluation questions buyers ask: What domains are natively supported? How does this differ from generic AI? What business ontology is built into the model?

Integration Depth Matters More Than Breadth

AI must integrate easily with knowledge systems, transactional systems like ticketing or CRM platforms, and identity and access platforms. Buyers look for pre-built connectors, robust APIs, proven deployments at similar enterprises, and clearly defined SLAs.

Surface-level integrations that simply read data aren’t enough. Buyers need AI that can write back to systems, trigger workflows, and operate within existing approval chains.

Trust Mechanisms Must Be Visible

Enterprise buyers scrutinize how hallucinations are controlled, whether AI is grounded in business data, if responses are filtered by role and permissions, and whether full audit trails are available.

They also expect clarity on data usage policies, model training boundaries, and compliance certifications. AI observability is no longer optional - it’s how enterprises manage risk while scaling autonomy.

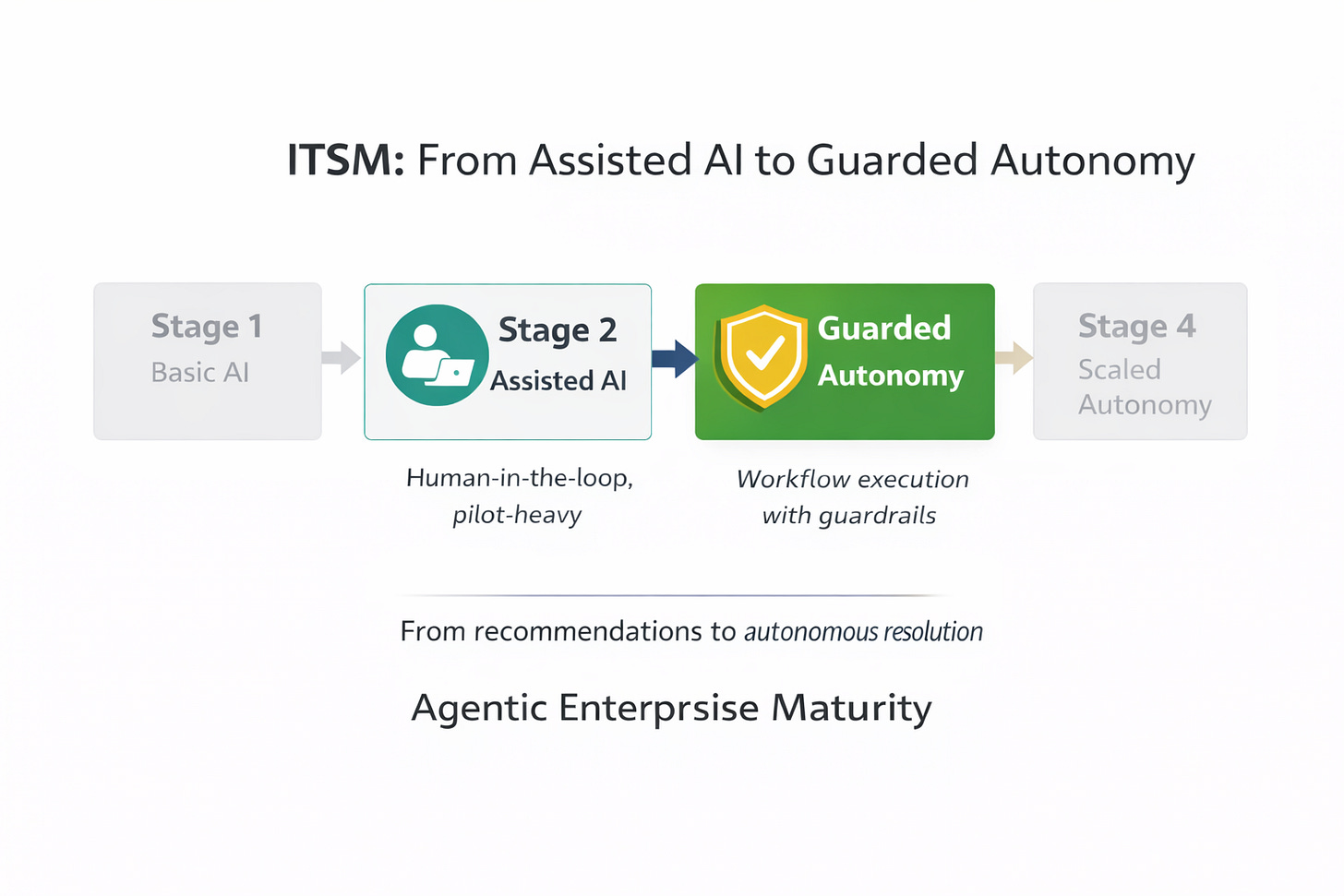

Case Study | ITSM from Stage 2 (Assisted AI) to Stage 3 (Guarded Autonomy)

This IT Service Management case study illustrates exactly how enterprises evaluate and approve AI systems when moving to Stage 3 (Guarded Autonomy).

Executive Summary

A large enterprise organization improved its IT Service Management efficiency by implementing a domain-specific agentic AI solution, achieving a 12-percentage-point increase in auto-resolution rates and a 5-point improvement in employee satisfaction within six months.

Why the Stage 2 Approach Failed

As IT request volumes increased, the organization initially deployed a horizontal agentic AI platform and invested heavily in building custom automations. Despite early promise, the system stalled due to poor request-to-solution matching and high maintenance overhead. Each new use case required manual configuration, limiting scalability and ROI.

This mirrors a common Stage 2 (Assisted AI) failure mode: AI recommendations existed, but humans remained responsible for execution, tuning, and exception handling.

What Changed at Stage 3 (Guarded Autonomy)

The organization replaced the generic platform with a domain-specific ITSM agentic AI designed to meet Stage 3 (Guarded Autonomy) evaluation criteria:

Domain Intelligence

The AI models were trained on IT-specific terminology and concepts, enabling accurate interpretation of employee requests. The system’s ontology could be customized with organization-specific terms. For example, while “VPN” is standard IT terminology, the system learned that “Cisco AnyConnect” was the company’s specific VPN solution, allowing it to correctly route requests like “install AnyConnect” to the VPN provisioning workflow.
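To make the ontology idea concrete, here is a minimal sketch of how an organization-specific term could be layered on top of standard IT vocabulary. It uses a plain keyword lookup as a stand-in for the trained models described above, and every name in it (`BASE_ONTOLOGY`, `ORG_SYNONYMS`, `route_request`, the workflow identifiers) is hypothetical rather than taken from the actual product.

```python
from typing import Optional

# Hypothetical base ontology: standard IT terms mapped to workflow names.
BASE_ONTOLOGY = {
    "vpn": "vpn_provisioning",
    "password": "password_reset",
}

# Hypothetical organization-specific synonyms that point at base terms.
ORG_SYNONYMS = {
    "cisco anyconnect": "vpn",
    "anyconnect": "vpn",
}


def route_request(text: str) -> Optional[str]:
    """Return the workflow for a request, or None if no known term matches."""
    lowered = text.lower()
    # Check organization-specific terms first, longest phrase first.
    for term in sorted(ORG_SYNONYMS, key=len, reverse=True):
        if term in lowered:
            return BASE_ONTOLOGY[ORG_SYNONYMS[term]]
    # Fall back to the standard IT vocabulary.
    for term, workflow in BASE_ONTOLOGY.items():
        if term in lowered:
            return workflow
    return None


print(route_request("install AnyConnect"))
```

A request that matches nothing returns `None`, which in a guarded deployment would be a natural point to escalate to a human rather than guess.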

Integration Depth

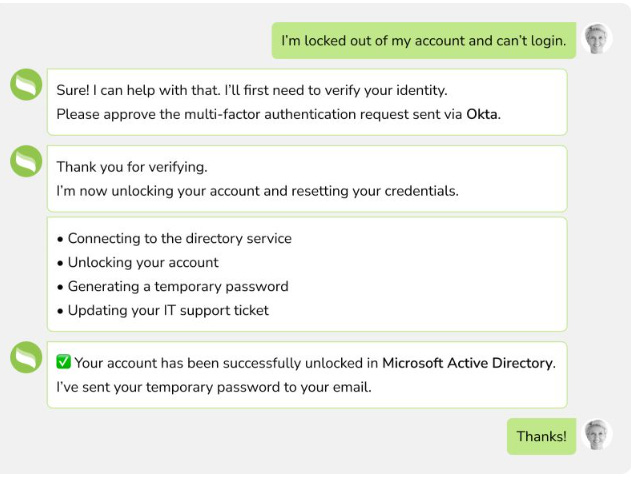

The AI operated directly within ITSM systems of record, executing identity verification, account unlocks, credential resets, directory updates, and ticket logging without human intervention.

Pre-Built Workflow Library

The platform included ready-to-deploy templates for common IT requests such as:

Password resets

Account unlocking

Laptop replacements

Software installations

These templates could be quickly configured and integrated into existing AI agents.

Natural Language Workflow Creation

For unique organizational needs, IT staff could create custom workflows using natural language instructions, eliminating the need for extensive technical configuration.

Note: This interaction shows an AI agent resolving a high-frequency IT access issue end-to-end - identity verification, account unlock, credential reset, directory updates, and ticket logging - without human intervention.

Results

This is what it looks like when domain + workflows + guardrails make autonomy possible.

Within six months of switching from a horizontal agentic AI platform to a domain-specific ITSM solution, the enterprise achieved clear performance improvements:

Auto-Resolution Rate: Increased by 12 percentage points

Employee Satisfaction (ESAT): Improved by 5 points

The prior vendor plateaued at ~75% auto-resolution, leaving a quarter of requests to be handled manually. By contrast, the Stage 3 (Guarded Autonomy) solution pushed resolution toward ~87%, unlocking compounding benefits: lower operational cost, faster resolution, higher employee satisfaction, and, critically, higher client retention as IT teams could reliably scale without adding headcount.
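A gain in percentage points is easy to confuse with a relative gain, so here is a short worked calculation using the approximate rates above. It assumes request volume stays the same before and after; the function name `resolution_gains` is illustrative only.

```python
def resolution_gains(before_rate: float, after_rate: float) -> dict:
    """Compare two auto-resolution rates expressed as fractions (0.75 = 75%)."""
    manual_before = 1 - before_rate
    manual_after = 1 - after_rate
    return {
        # Absolute change, in percentage points.
        "point_gain": (after_rate - before_rate) * 100,
        # Change relative to the starting auto-resolution rate.
        "relative_gain_pct": (after_rate - before_rate) / before_rate * 100,
        # Drop in the share of requests that still need a human.
        "manual_reduction_pct": (manual_before - manual_after) / manual_before * 100,
    }


gains = resolution_gains(before_rate=0.75, after_rate=0.87)
for name, value in gains.items():
    print(f"{name}: {value:.0f}")
```

The third figure is often the most useful one for an operations leader: at constant volume, moving from ~75% to ~87% cuts the manually handled share from a quarter of requests to roughly an eighth.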

Why This Case Crossed the Gate to Stage 3 (Guarded Autonomy)

In the Agentic AI Maturity Model, the transition from Stage 2 (Assisted AI) to Stage 3 (Guarded Autonomy) is not a technical upgrade, it’s a trust decision.

At Stage 2 (Assisted AI), AI is allowed to recommend. At Stage 3 (Guarded Autonomy), AI is allowed to act.

That permission only comes when enterprises can see, control, and audit how decisions are made.

The difference wasn’t just better automation. It was visible trust.

Make Trust Visible Throughout the Sales Process

Early adopters of AI also uncovered some trust issues. Enterprises learned that solutions have to stand up against misuse (e.g., toxic conversations) and abuse (e.g., prompt injection), and be able to scale to business needs and volume, so that they do not lose business or damage their brand reputation.

Stage 3 (Guarded Autonomy) buyers expect vendors to show, not claim:

How responses are grounded in verified data

Which levers and configurations let customers tune results to their needs

How autonomy is constrained, escalated, or overridden

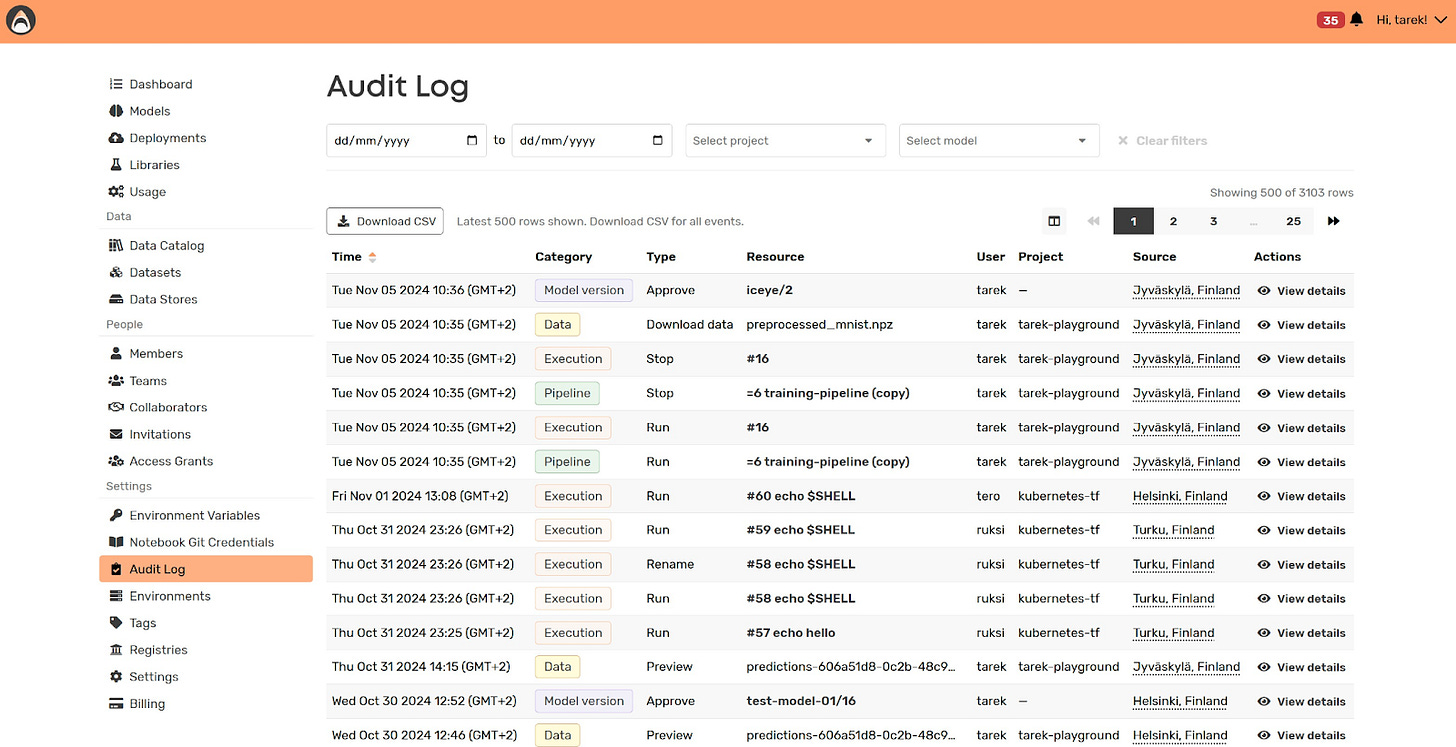

This is why AI observability becomes mandatory at Stage 3 (Guarded Autonomy). Buyers want to see why a decision was made, which data was used, what rules applied, and when humans intervened.

Explainability is the mechanism that converts probabilistic systems into enterprise software.

Don’t just tell buyers your AI is trustworthy, prove it by making the mechanisms visible.

In a financial operations use case, an enterprise used AI to assist with invoice validation and payment approvals. Early pilots revealed a critical concern: while the AI identified anomalies effectively, finance leaders couldn’t explain why certain invoices were flagged or approved.

This lack of explainability stalled rollout. In regulated environments, “the model decided” is not an acceptable answer.

To move forward, the AI vendor had to expose trust mechanisms directly in the product. Each decision included an explanation trace showing which data points were used, what thresholds were applied, and whether any rules overrode the model’s recommendation. Finance teams could adjust sensitivity levels, enforce deterministic behavior for high-value transactions, and audit every action taken by the AI.

Note: Shows the audit log of invoices, the rule explaining why each invoice was rejected, and the recommendation.
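As a rough illustration of what an explanation trace might contain, the sketch below records the inputs used, the threshold in force, and whether a deterministic rule overrode the model. It is not the vendor's implementation: the field names, the `decide` function, the 0.7 threshold, and the 50,000 high-value limit are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DecisionTrace:
    invoice_id: str
    decision: str            # "approve", "flag", or "escalate"
    model_score: float       # anomaly score from the model, 0.0 to 1.0
    threshold: float         # sensitivity threshold in force for this decision
    inputs_used: List[str] = field(default_factory=list)
    rules_applied: List[str] = field(default_factory=list)
    rule_overrode_model: bool = False


def decide(invoice_id: str, amount: float, anomaly_score: float,
           threshold: float = 0.7, high_value_limit: float = 50_000.0) -> DecisionTrace:
    """Return a decision together with the evidence needed to audit it."""
    inputs = ["amount", "anomaly_score"]
    # Deterministic rule (assumed): high-value invoices always go to a human,
    # regardless of what the model score says.
    if amount >= high_value_limit:
        return DecisionTrace(invoice_id, "escalate", anomaly_score, threshold,
                             inputs, ["high_value_requires_human"],
                             rule_overrode_model=True)
    # Otherwise the model score is compared with the configurable threshold.
    decision = "flag" if anomaly_score >= threshold else "approve"
    return DecisionTrace(invoice_id, decision, anomaly_score, threshold,
                         inputs, ["anomaly_threshold"])


print(decide("INV-001", amount=120_000.0, anomaly_score=0.10))
print(decide("INV-002", amount=900.0, anomaly_score=0.90))
```

Because every decision returns the same structure, finance teams can tune `threshold` and audit the results without asking the vendor why a given invoice was handled the way it was.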

Key Takeaways

This case study isn’t just about better IT automation. It demonstrates how enterprises evaluate AI when granting autonomy. Domain expertise, packaged workflows, deep integrations, and visible trust mechanisms are not differentiators; they are requirements for crossing from pilot to platform.

If you’ve gotten this far, you may be ready to navigate to the 🔥 section and check out our Playbook now.

Case Study | Automotive from Stage 3 (Guarded Autonomy) to Stage 4 (Scaled Autonomy)

Once trust is established at Stage 3 (Guarded Autonomy), something important happens: autonomy stops being risky and starts becoming repeatable.

This is where companies move from Guarded Autonomy (Stage 3) to Scaled Autonomy (Stage 4).

Most AI-SDR products fail in enterprise sales for the same reason: they stop at intelligence instead of owning the outcome.

Early versions of AI sales assistants could summarize leads, draft emails, or recommend follow-ups, but they still required significant manual setup and human orchestration. That friction is manageable in SMB environments. In enterprise settings, it kills adoption.

This is where Manoj made a critical shift.

Instead of selling an “AI-powered SDR,” he redesigned the product to behave like an autonomous sales operator - natively integrated into dealership CRMs, pre-configured for common automotive workflows, and capable of running end-to-end engagement without manual intervention.

This case study shows what happens after Stage 3 (Guarded Autonomy) works.

Executive Summary

A Midwest enterprise automotive dealership group achieved 5× pipeline growth and 10× ROI by deploying an AI digital assistant that operated as an autonomous sales operator - not a copilot.

After proving safety, reliability, and ROI in one showroom (Stage 3 Guarded Autonomy), the solution expanded across all four locations (Stage 4 Scaled Autonomy), becoming part of the dealership’s core revenue infrastructure.

Industry Context

Automotive sales representatives face the dual challenge of capturing and qualifying high-value leads while delivering exceptional customer service during major purchasing decisions. As dealerships grow, scaling human resources to maintain service quality becomes increasingly expensive and time-intensive.

Why the Stage 2 (Assisted AI) Approach Failed

Auto dealerships operate on thin margins and need to move inventory quickly to maximize revenue. While AI digital assistants promised to help sales teams engage leads through automated email conversations, early implementations faced significant adoption barriers:

Data integration friction: Lead information had to be manually extracted from various systems and uploaded to the AI platform

Time-intensive setup: Sales representatives needed to write initial email content before the AI could begin engaging prospects

Low adoption: The complexity of these workflows made the tool burdensome rather than helpful, limiting actual business impact

Without effective AI assistance, dealerships defaulted to hiring additional sales representatives. While this approach provided some relief, it introduced new challenges:

High recruitment and training costs

Extended onboarding timelines

Ongoing overhead from increased headcount

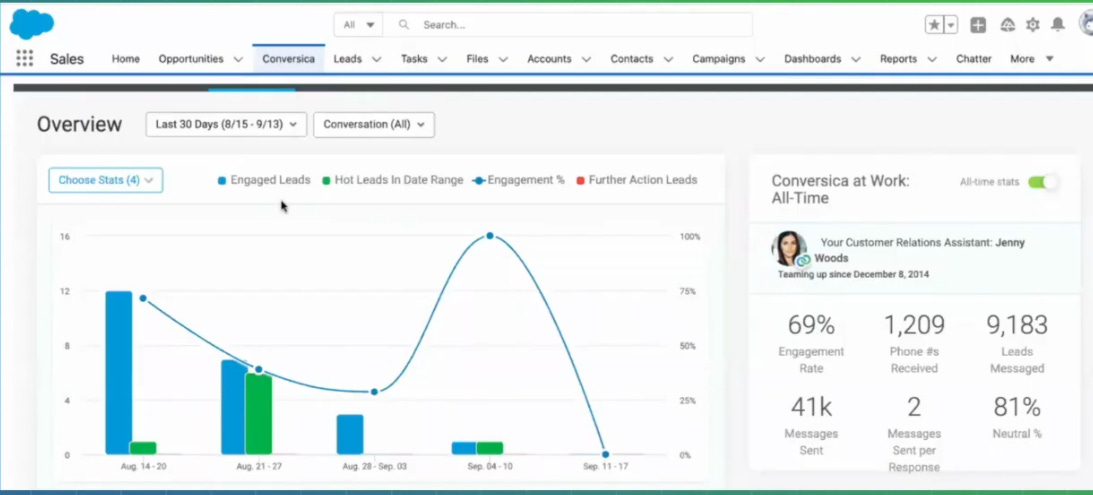

The Stage 3 (Guarded Autonomy) Redesign

The AI platform vendor redesigned the solution to eliminate friction points and accelerate time-to-value.

Here are the key components:

Seamless CRM Integration

Native integrations with automotive CRM systems (including Salesforce, VinSolutions, and Tekion) enabled automatic synchronization of lead data. This eliminated manual data entry and positioned the AI assistant within the dealership’s existing technology ecosystem.

Industry-Specific Conversation Templates

Pre-built email conversation flows addressed the most common automotive sales scenarios:

Website visit follow-ups

Test drive scheduling

Post-event engagement

Inventory inquiries

Each template could be customized using simple natural language instructions, allowing sales teams to personalize communications without starting from scratch.

Automated Campaign Management

By connecting directly to the system of record, the platform enabled sales representatives to schedule recurring campaigns automatically, ensuring consistent lead engagement without manual intervention.

Note: The screenshot shows how integrating your product with the system of record is key to adoption and growth.

Results

The impact was immediate and substantial. After the dealer implemented these enhancements at one showroom, the Midwest dealership group was so impressed that they rapidly deployed AI digital assistants across their remaining three locations.

Once autonomy proved safe and effective in one location, expansion was low-risk. The same system could be replicated across showrooms with minimal incremental cost.

This is the defining characteristic of Stage 4 (Scaled Autonomy): autonomy becomes an expansion engine.

Business Impact

Prior to the redesign, the AI-SDR product was capped as a low-scale SMB solution selling one-off licenses with limited expansion potential.

After adapting the product for automotive dealership groups, the company shifted to multi-location contracts and repeatable rollouts. This transformation enabled the business to grow from approximately $10 million in ARR to over $20 million in ARR, with automotive dealerships representing the majority of new revenue growth.

Key Insights

The real inflection point wasn’t better automation, it was proving that autonomy could be trusted and repeated. By integrating natively into dealership CRMs and packaging industry-specific workflows, the AI moved from assisting reps to owning outcomes end-to-end. Once that autonomy worked safely in one showroom, expansion across additional locations became a low-risk decision. This shift from insight to ownership unlocked a new growth model.

What Winners Do Differently

Lead with Packaged, Domain-Specific Solutions

The move from Stage 2 (Assisted AI) to Stage 3 (Guarded Autonomy) requires a fundamental change in how AI is packaged and sold. Stage 3 buyers aren’t looking for flexible platforms that can eventually be configured to own outcomes. They want solutions that are already opinionated, domain-aware, and ready to operate autonomously.

Winning vendors no longer sell generic “AI platforms” alone. Instead, they lead with domain-specific solutions (IT, HR, Facilities, Sales, Finance) that bundle domain ontology, pre-trained models, and production-ready workflows. These domain packs also include pre-built integrations into systems of record for that function. For example, an IT domain agentic AI understands concepts like incidents and service catalogs, ships with workflows for password resets and software requests, and connects natively to systems like ServiceNow or Freshservice out of the box.

This kind of packaging is what allows enterprises to move from pilots to rollouts. It collapses time-to-value from months to weeks and reduces the operational risk of granting autonomy. At Stage 3, the biggest competitive advantage isn’t model sophistication - it’s how quickly and safely autonomy can be deployed.

Demonstrate Action, Not Just Intelligence

As AI capabilities have advanced, enterprise expectations have risen in parallel. Stage 2 (Assisted AI) systems retrieve knowledge and make recommendations. Stage 3 (Guarded Autonomy) systems take action.

In practice, this means AI demos must go beyond insight and show end-to-end task ownership inside real systems. A knowledge-based AI might explain why pipeline dropped last week by summarizing CRM data, but a human still has to interpret the insight, decide what to do, and execute changes across multiple tools.

An agentic AI operating at Stage 3 (Guarded Autonomy) goes further. It detects a drop in inbound lead response time, correlates it with staffing gaps and routing rules, updates assignment logic directly in the CRM, triggers alerts to sales managers, and launches a corrective workflow — all within clearly defined guardrails.

Offer Scoped Pilots or Trials

Because Stage 3 (Guarded Autonomy) introduces autonomy, pilots must be designed differently. These are not open-ended experiments to discover use cases. Stage 3 (Guarded Autonomy) pilots are scoped to prove trust.

In crowded AI markets where many vendors claim similar capabilities, successful companies reduce buyer risk through tightly bounded proof points: time-bound POCs, usage-limited trials, or department-specific deployments with clear success criteria. Examples include a 90-day POC running alongside an existing system or a capped-volume deployment limited to a single business unit.

The goal of these pilots is not learning - it’s validation. Enterprises use them to confirm that the AI behaves predictably, integrates cleanly, and delivers measurable outcomes before granting broader autonomy. Vendors that understand this distinction move through Stage 3 (Guarded Autonomy) faster; those that don’t remain stuck in perpetual pilots.

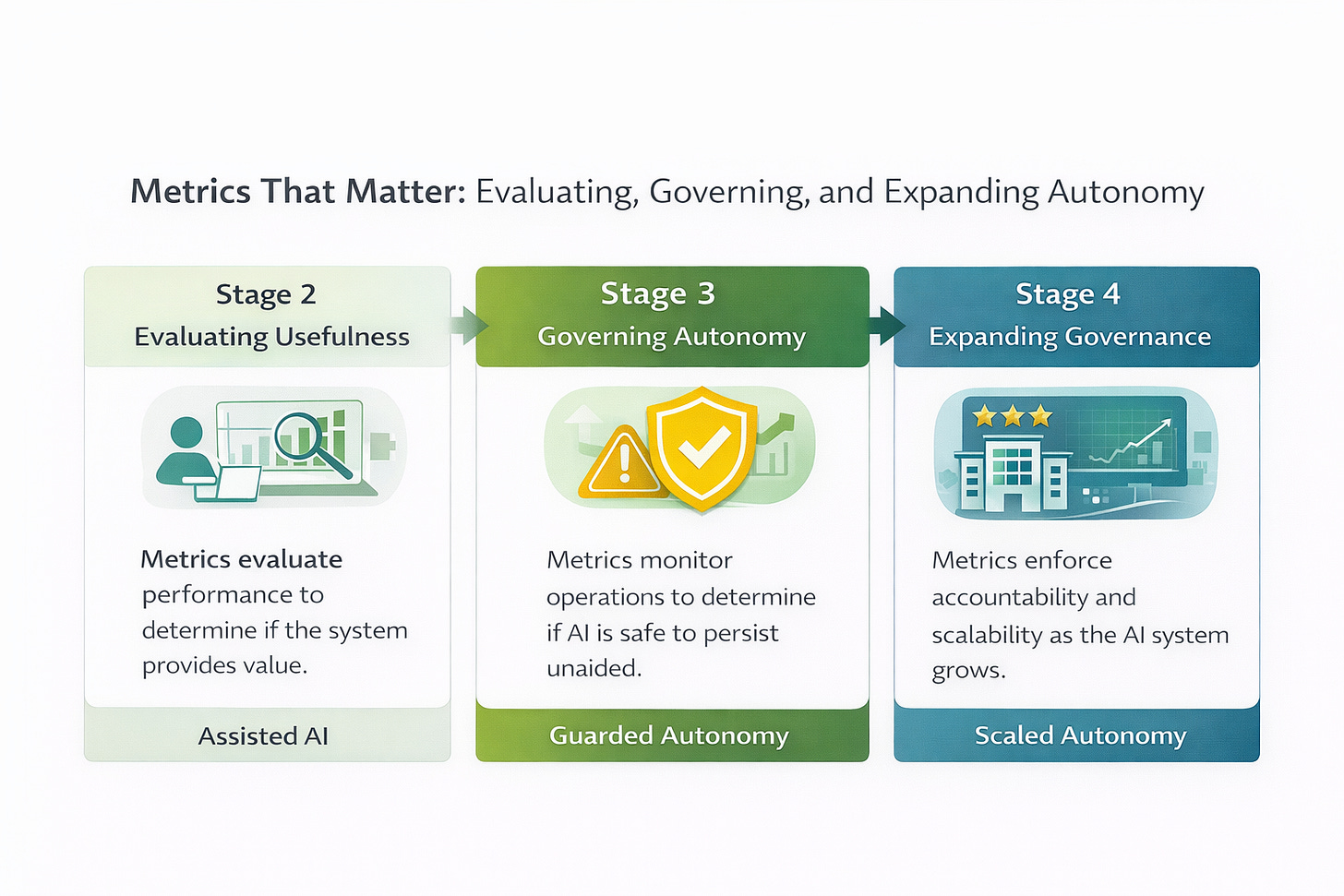

The Metrics That Matter

In the Agentic AI Maturity Model, metrics are not just performance indicators; they are control mechanisms. Enterprises use them to decide whether to grant more autonomy (move from Stage 2 Assisted AI to Stage 3 Guarded Autonomy), expand deployment (move from Stage 3 Guarded Autonomy to Stage 4 Scaled Autonomy), or roll autonomy back entirely.

As AI products mature and enter real enterprise environments, leaders shift from asking “Does this work?” to “Does this create measurable value at scale, safely?” That question can only be answered through outcome-driven metrics tied directly to business impact.

Note: As AI systems mature, metrics evolve from feedback signals to control systems. In Stage 2 (Assisted AI), metrics help teams judge whether AI responses are useful. In Stage 3, those same metrics become gating mechanisms determining when autonomy is allowed, constrained, or rolled back. By Stage 4, metrics function as governance infrastructure, enabling enterprises to safely scale autonomy across teams, workflows, and business units.

Enterprises track a combination of effectiveness, containment, and experience metrics to understand how well an AI agent is performing and whether it can be trusted with greater autonomy. Customers want to measure task effectiveness and outcomes to understand how AI is helping their business.

Some key product metrics used to optimize an agentic solution:

Auto-resolution rate - The share of requests in which the AI agent successfully responded to the employee or customer

First contact resolution (FCR) - The user got a response from the agentic AI without escalating to a human agent and did not contact the business again about the same request within the next 72 hours

Response accuracy rate - How well the response answers the user’s question. This may be measured via end-user feedback (thumbs up/down)

Assisted rate - The AI agent partially answered the user’s query, even though the request may have been escalated to a human agent

CSAT / ESAT - Customer or employee satisfaction with the contact experience, measured after the fact, usually via survey
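The definitions above can be computed from a simple contact log. The sketch below is one possible reading of them, not a standard: the `Contact` fields and the `summarize` function are hypothetical, and the 72-hour FCR window is exposed as a parameter because different teams may set it differently.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Contact:
    resolved_by_ai: bool                  # AI responded successfully on its own
    escalated: bool                       # handed off to a human agent
    ai_partially_helped: bool             # AI contributed before the handoff
    hours_until_repeat: Optional[float]   # None if the user never came back
    thumbs_up: Optional[bool]             # None if no feedback was given


def _rate(count: int, total: int) -> float:
    return count / total if total else 0.0


def summarize(contacts: List[Contact], fcr_window_hours: float = 72.0) -> Dict[str, float]:
    total = len(contacts)
    auto = sum(c.resolved_by_ai for c in contacts)
    # FCR: resolved by AI, never escalated, and no repeat contact inside the window.
    fcr = sum(
        c.resolved_by_ai
        and not c.escalated
        and (c.hours_until_repeat is None or c.hours_until_repeat > fcr_window_hours)
        for c in contacts
    )
    assisted = sum(c.escalated and c.ai_partially_helped for c in contacts)
    # Accuracy is measured only over contacts that left feedback.
    rated = [c for c in contacts if c.thumbs_up is not None]
    return {
        "auto_resolution_rate": _rate(auto, total),
        "first_contact_resolution": _rate(fcr, total),
        "assisted_rate": _rate(assisted, total),
        "response_accuracy_rate": _rate(sum(c.thumbs_up for c in rated), len(rated)),
    }


log = [
    Contact(True, False, False, None, True),
    Contact(True, False, False, 24.0, False),   # came back within 72 hours
    Contact(False, True, True, None, None),
    Contact(False, True, False, None, None),
]
print(summarize(log))
```

Note that the second contact counts toward auto-resolution but not toward FCR, which is why the two rates usually diverge in practice.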

Enterprises don’t look at these metrics in isolation - they use them to derive financial and operational impact. For example, a customer service organization may focus on increasing auto-resolution rate. If the AI resolves X additional requests per month and the average cost per human-handled contact is $Y, the business can directly quantify $(X × Y) in cost savings.
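The X × Y calculation can be written out directly. In this sketch the monthly volume, the two rates, and the $8 cost per human-handled contact are purely illustrative assumptions, as are the function names.

```python
def additional_auto_resolved(monthly_volume: int, old_rate: float, new_rate: float) -> int:
    """X: extra requests resolved by AI per month after the rate improves."""
    return round(monthly_volume * (new_rate - old_rate))


def monthly_savings(additional_resolved: int, cost_per_human_contact: float) -> float:
    """X * Y: cost avoided by not routing those requests to a human."""
    return additional_resolved * cost_per_human_contact


# Hypothetical inputs: 10,000 requests per month, auto-resolution rising
# from 75% to 87%, and $8 per human-handled contact.
x = additional_auto_resolved(10_000, old_rate=0.75, new_rate=0.87)
y = 8.0
print(f"X = {x} requests, savings = ${monthly_savings(x, y):,.2f} per month")
```

Keeping X and Y as separate inputs matters in budget reviews: finance can challenge the cost-per-contact assumption without reopening the question of how many requests the AI actually resolved.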

These metrics also influence expansion decisions. Sustained improvements signal that the AI can be deployed across more teams, higher volumes, or additional geographies - the defining move from Stage 3 (Guarded Autonomy) to Stage 4 (Scaled Autonomy).

The Bottom Line

Enterprise buyers are no longer buying AI because it’s impressive. They’re buying AI because it solves real problems, acts autonomously and safely, delivers measurable ROI, integrates cleanly into their existing ecosystem, and can be trusted at scale.

The vendors who win are the ones that combine agentic capability, deep domain expertise, strong packaging, and enterprise-grade trust, and then prove it with real metrics in production. If your AI product can’t demonstrate these elements clearly and quickly, enterprise deals stall, pilots linger in Stage 2 (Assisted AI), and momentum fades.

The real challenge - the “so what?” - is this: getting to ~$10M ARR with AI isn’t that hard anymore. A strong demo, early adopters, and a compelling use case can get you there.

Getting past that is where most AI companies struggle. Just like in B2B SaaS, the next phase of growth is won by teams that know how to compete at the enterprise level, crossing the gap from assisted AI to guarded autonomy across trust, integration, governance, and ownership of outcomes.

Enterprise buyers want AI to succeed.

They’re actively looking for vendors who understand their reality and can deliver value safely, repeatedly, and at scale. The companies that meet them there don’t just close deals; they unlock expansion, standardization, and durable enterprise businesses.

🔥 INSIDER GROWTH PLAYBOOK: From AI Pilots to Scaled Enterprise Revenue

Everything we covered above points to the same reality: most AI products don’t fail because the technology is weak. They fail because teams don’t know how to cross the gap from interesting pilot to enterprise-scale deployment.

So we built this playbook to answer one question we get over and over again:

“What do we actually need to change to make our AI enterprise-ready?”

This playbook is a practical guide for founders, product leaders, and GTM teams who want to:

Diagnose where their AI product sits in the Agentic AI Maturity Model

Understand why pilots stall between Assisted AI and Guarded Autonomy

Make the minimum upgrades required to unlock enterprise scale

Identify What Stage of Maturity You’re In

If you misdiagnose your stage, everything downstream breaks. Enterprise scale stalls when teams try to skip steps.

Below is how to correctly identify where you are today.

Stage 1: Experimental AI (Pilot-Only)

What This Looks Like

AI features launched as copilots, chatbots, or assistants

Primarily focused on knowledge retrieval or summarization

Demos perform well; production usage is limited

Value depends heavily on humans interpreting and acting on outputs

Why Enterprises Get Stuck Here

AI creates insight, but doesn’t own outcomes

High cognitive load on users

No clear ROI story beyond “productivity”

Enterprise Buyer Signal

“Interesting, but we’d need to build a lot around this.”

Minimum Requirements to Move Forward

Identify one business workflow the AI will fully own (not assist)

Define a clear success metric (e.g., auto-resolution rate, lead response time)

Limit scope intentionally; breadth kills pilots

If your AI stops at insight, you are still in Stage 1.

Stage 2: Assisted AI (Human-in-the-Loop)

What This Looks Like

AI analyzes data and recommends actions

Humans approve, edit, or trigger execution

Early pilots begin inside real teams

Some ROI appears, but scaling is slow

Common Failure Mode

Human bottlenecks prevent scale

Inconsistent usage across teams

Enterprises struggle to justify broader rollout

Enterprise Buyer Signal

“It works, but it still depends too much on our people.”

Minimum Requirements to Move Forward

Introduce decision boundaries (what AI can do autonomously vs. not)

Reduce manual setup with pre-built workflows

Show repeatability across teams or locations

If humans are required for every meaningful action, autonomy is capped.

Stage 3: Guarded Autonomy (Enterprise-Ready)

What This Looks Like

AI executes end-to-end workflows autonomously

Clear guardrails define where AI can act

Integrated directly into systems of record

Human escalation is the exception, not the default

Why This Is the Enterprise Inflection Point

This is where:

Pilots turn into rollouts

Single-team usage turns into multi-department adoption

SMB motions become enterprise motions

Enterprise Buyer Signal

“We can deploy this safely across the organization.”

Non-Negotiable Capabilities

Agentic execution (AI completes tasks, not just suggests)

Domain-specific packaging (IT, Sales, Finance, etc.)

Configurable trust controls (confidence thresholds, escalation rules)

Observable decision-making (logs, traces, explanations)

If trust mechanisms aren’t visible, autonomy won’t be granted.
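A configurable trust control can be as small as an allow-list plus a confidence threshold. The sketch below shows one way to express that gate; the names (`Guardrail`, `gate`, `Action`), the 0.85 default, and the choice to block out-of-scope tasks rather than escalate them are assumptions for illustration, not a description of any particular product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Action(Enum):
    EXECUTE = "execute"      # AI acts autonomously
    ESCALATE = "escalate"    # hand off to a human
    BLOCK = "block"          # task is outside what the AI may do


@dataclass(frozen=True)
class Guardrail:
    allowed_tasks: FrozenSet[str]
    min_confidence: float = 0.85   # assumed default; customers would tune this


def gate(task: str, confidence: float, policy: Guardrail) -> Action:
    """Decide whether the agent may act on a task at a given confidence."""
    if task not in policy.allowed_tasks:
        return Action.BLOCK
    if confidence < policy.min_confidence:
        return Action.ESCALATE
    return Action.EXECUTE


policy = Guardrail(allowed_tasks=frozenset({"password_reset", "account_unlock"}))
print(gate("password_reset", 0.93, policy))
print(gate("password_reset", 0.60, policy))
print(gate("delete_user", 0.99, policy))
```

Exposing `min_confidence` and `allowed_tasks` as configuration, rather than hard-coding them, is what lets a buyer widen autonomy gradually as the metrics justify it.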

Stage 4: Scaled Autonomy (Enterprise Expansion Engine)

What This Looks Like

AI operates as part of core business infrastructure

Autonomy scales safely across teams, regions, and volumes

ROI is measurable and visible

Expansion happens organically

What Winning Companies Do Differently

Sell outcomes, not features

Package AI by domain and use case

Design for expansion from Day 1

Treat trust, observability, and governance as product features

Enterprise Buyer Signal

“This is now part of how we run the business.”

The 30–60–90 Day Enterprise AI Upgrade Plan

Days 0–30: Diagnose and Focus

Identify your current maturity stage

Pick one workflow to fully own

Define one metric that matters to the buyer

Days 31–60: Package for Enterprise

Build domain-specific workflows or templates

Integrate natively into systems of record

Introduce guardrails and confidence thresholds

Days 61–90: Prove Trust and Scale

Ship AI observability (logs, traces, explanations)

Run a scoped enterprise pilot with expansion intent

Tie usage directly to ROI metrics

Gotchas: Why Enterprise AI Pilots Still Fail

Selling intelligence instead of ownership

Generic platforms forced into domain-specific problems

Trust mechanisms hidden behind marketing claims

No clear expansion path after the pilot

ROI discussed verbally, not measured in-product

If any of these are true, scale will stall.

The Enterprise AI Litmus Test

Before selling to enterprise, ask:

Can our AI complete a task end-to-end?

Can buyers see why decisions were made?

Can risk be configured, not just avoided?

Can success be measured without manual analysis?

Is expansion designed or accidental?

If the answer is “no” to more than one, you’re not enterprise-ready yet.
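For teams that want to run the litmus test as a recurring self-check, the rule above can be encoded in a few lines. The question keys and the `fails_litmus_test` function are hypothetical names; the only logic taken from the text is that more than one “no” means not enterprise-ready yet.

```python
from typing import Dict

LITMUS_QUESTIONS = [
    "completes_task_end_to_end",
    "decisions_are_explainable",
    "risk_is_configurable",
    "success_measured_without_manual_analysis",
    "expansion_is_designed",
]


def fails_litmus_test(answers: Dict[str, bool]) -> bool:
    """Return True when more than one question is answered 'no'.

    A missing answer is treated as 'no'.
    """
    no_count = sum(1 for q in LITMUS_QUESTIONS if not answers.get(q, False))
    return no_count > 1


answers = {q: True for q in LITMUS_QUESTIONS}
answers["risk_is_configurable"] = False
print(fails_litmus_test(answers))   # one "no" is tolerated

answers["expansion_is_designed"] = False
print(fails_litmus_test(answers))   # two "no" answers: not enterprise-ready yet
```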

If you’re building AI for enterprise and pilots aren’t expanding the way you expected, we’ve seen this pattern many times before. Happy to share what’s worked and what hasn’t.