Your AI Feature Didn’t Work. Here’s Why.

A tactical guide with case studies for designing systems that scale impact, reduce friction, and turn cost centers into growth engines.

👋 Hi, we’re Gaurav and Kunal, and welcome to the Insider Growth Group newsletter, our bi-weekly deep dive into the hidden playbooks behind tech’s fastest-growing companies.

We give you an edge on what’s happening today: actionable strategies, proven frameworks, and access to how top operators are scaling companies across industries. This newsletter helps you boost your key metrics—whether you're launching a product from scratch or scaling an existing one.

What We Stand For

Actionable Insights: Our content is a no-fluff, practical blueprint you can implement today, featuring real-world examples of what works—and what doesn’t.

Vetted Expertise: We rely on insights from seasoned professionals who truly understand what it takes to scale a business.

Community Learning: Join our network of builders, sharers, and doers to exchange experiences, compare growth tactics, and level up together.

Introduction: The False Comfort of Product Narratives

Scroll through LinkedIn lately and you’ll see the same AI victory-lap refrain: “We shipped v1!” “Now with AI!” “AI-powered [insert noun here]!” Likes and buzz trample substance and impact.

Take the Xbox job post. Principal Dev Lead Mike Matsel shared a recruiting image with Xbox branding, inviting submissions from “folks with experience in device drivers, GPU performance…”—right after mass layoffs. The AI-generated graphic that accompanied it was an embarrassment, with the monitor output hilariously flipped toward the viewer. Commenters didn’t hold back: “This is so tone deaf that I hope it is satire,” one wrote, while another noted the irony in using sloppy AI to hire designers just after cutting so many jobs.

Or take Duolingo’s CEO declaring that the company would become “AI-first,” with “AI proficiency” influencing hiring and promotions. On paper, it’s an ambitious vision, one that could transform the company if executed with care, transparency, and a genuine focus on user value. But more often than not, moves like this end up as blunt instruments: alienating teams, spooking customers, and reducing AI to a compliance checkbox rather than a catalyst for better outcomes.

Then there’s the launch of Figma Make, a headline-grabbing AI prototyping tool positioned as a leap forward. But compared to Vercel’s v0, it’s a step back. It doesn’t address the real opportunity in collaborative design; it just ticks the “we have AI too” box.

And here’s the thing—you hear about these launches, and then… nothing. No adoption stories. No systems evolving. Just another hype post fading into LinkedIn’s algorithmic abyss.

First-principles thinking remains our North Star—AI doesn’t change that. The point of Product isn’t just building quickly; it’s asking why we’re building. With AI, the hard work shifts toward the most fun part of the cycle—exploration, validation, system design—while execution becomes faster and cheaper. That means there’s also more junk flooding the world. Our job? Make products people actually use. That’s the real point of Product.

To help illustrate how fundamentally important this is, we partnered with Jerrell Taylor, an AI Strategist with Distly.ai, to co-author this piece and offer each of you a playbook for implementing AI that drives lasting change.

What AI Can and Can’t Do

The first commercially successful steam engine, built in 1712, had one job: pumping water out of a coal mine. It was a miracle of engineering that solved a single, expensive problem. For decades, that's all steam power was. Just a better pump applied to an old process. No one looked at that engine and saw the Industrial Revolution. They saw a dry mine.

You are making the exact same mistake with AI today. You're buying a very expensive pump.

Most companies are celebrating AI for its obvious talents: faster pattern recognition, task automation, and summarization. But they are applying this incredible new power source to the same broken system that created their problems in the first place.

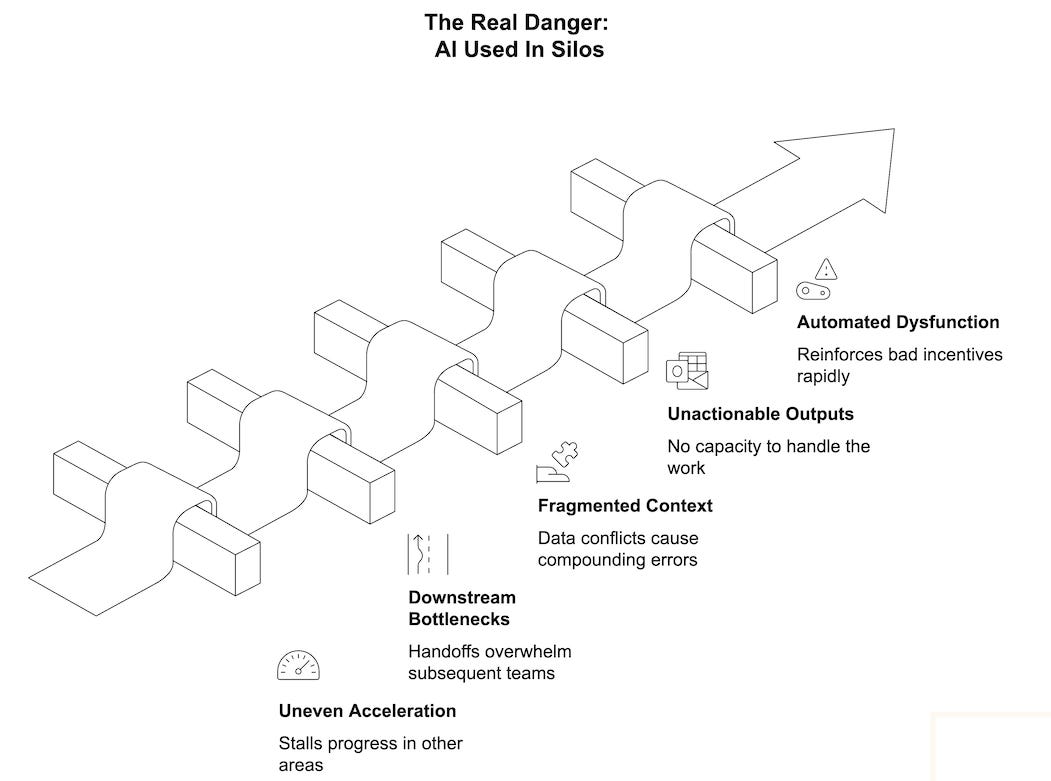

The real danger isn't that AI will fail; it's that applying it thoughtlessly will succeed just enough to create chaos. It speeds up one team while creating massive bottlenecks for the next. It generates reports nobody has the capacity to act on. It automates a task without changing the broken incentive structure that governs it.

Unstructured coordination is the real bottleneck, and AI alone cannot solve it.

To work across a business, AI requires two things that most organizations completely lack:

A Unified Context (Representation): Without a single, shared understanding of reality, AI is just a powerful idiot, optimizing tasks based on fragmented and contradictory inputs. It can't generate system-wide coherence if you're feeding it organizational chaos.

An Architecture for Coordination: This isn't about plugging in a tool; it's about building a system. That requires:

Composition: the blueprint for how pieces fit together and actors connect in shared workflows

Governance: the rules, incentives, and metrics that dictate behavior within that system

Decision-Making & Execution: the logic for how choices are made and executed

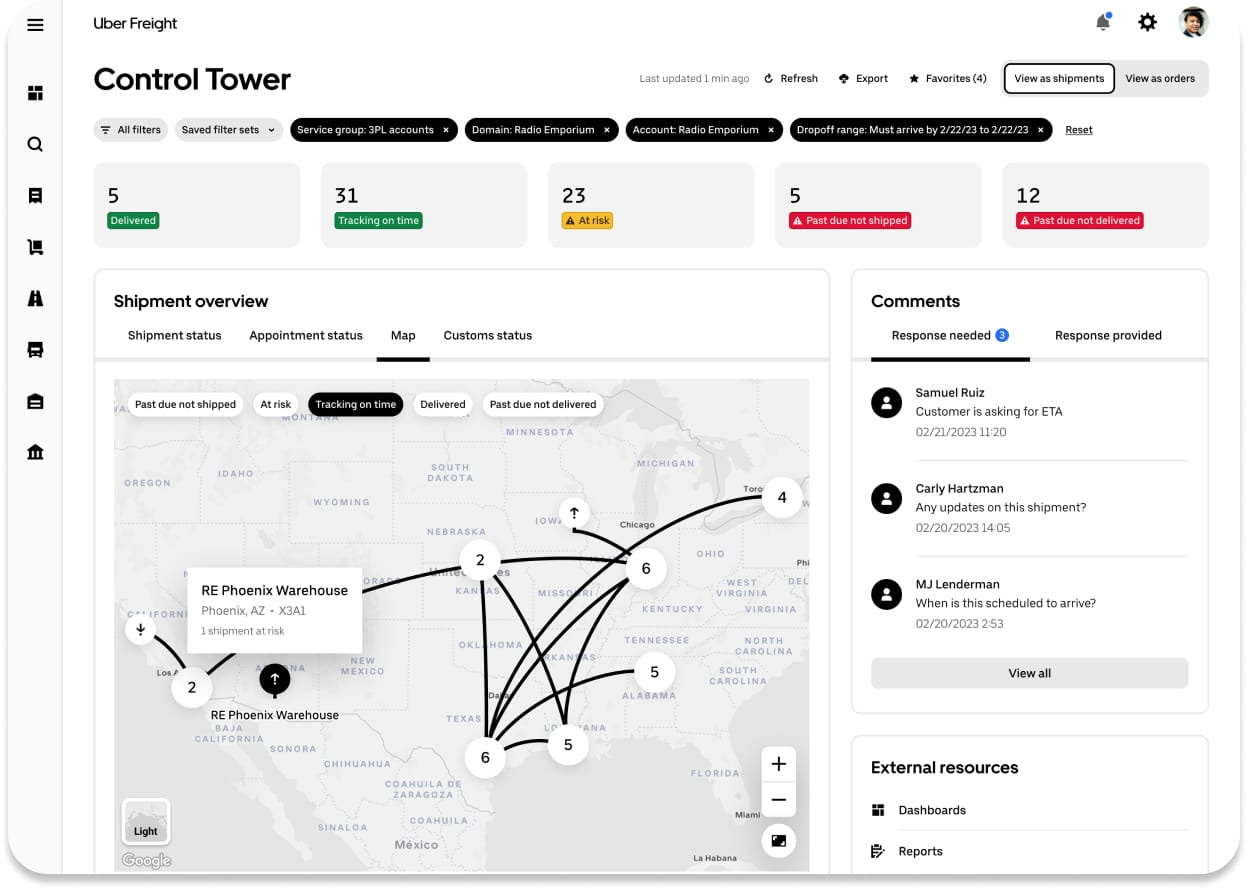

Look at what happened to trucking. For decades, a trucker’s job was a complex mix of driving, negotiation, and relationship management based on informal trust. Then platforms like Uber Freight and Convoy imposed a new architecture.

Uber Freight’s Transportation Management System Dashboard.

They started by changing the Representation of the work itself, reducing a complex, judgment-driven job into a few measurable metrics that the platform could track. Once reality was represented by their data, they seized control of Decision-Making; algorithms now decide pricing, routing, and load assignments, removing the trucker's ability to negotiate value. The driver's role was then reduced to pure Execution. The job itself was unbundled and rebundled—a new Composition—into an algorithmically managed workflow. Finally, they enforced a new form of Governance, defining performance through narrow metrics and removing the autonomy that came with informal reputations and trust.

The platform didn't help truckers do their old job better. It used the levers of coordination to make their old skills irrelevant, fundamentally changing the system and their role within it.

The question now isn't whether AI can help you work faster. The question is: does your current role have any right to exist when its foundational constraints are gone?

The Myth of the Linear Product Cycle

Most product teams still think in the same neat, linear stages: Discover → Build → Launch → Measure. It’s tidy, it’s intuitive—and it’s a dangerous oversimplification.

In reality, products live inside systems, and systems are recursive and layered. One release doesn’t mark the end of the work—it triggers ripples across user flows, data pipelines, integrations, and operational processes.

A new feature isn’t a self-contained unit. It sits inside an ecosystem of other features, workflows, and dependencies. Touch one part, and you inevitably affect others—sometimes in ways your roadmap never accounted for.

Example 1 – AI lead scoring in a CRM: A sales org adds AI-powered lead scoring to prioritize outreach. Local performance looks great: top leads get called faster. But the scoring model relies on data that marketing doesn’t capture, forcing marketing to rework forms and campaigns. Conversion improves slightly, but campaign costs spike.

City planning parallel: It’s like adding a new freeway off-ramp without improving the connecting roads; traffic moves faster in one spot, then jams up elsewhere.

Example 2 – Chatbot for customer support: A company launches an AI chatbot to handle FAQs and reduce ticket volume. It works (ticket volume drops), but now edge cases pile up for human agents, slowing resolution times for complex issues. Support quality metrics worsen even though the bot’s success rate is “high.”

City planning parallel: Imagine building an express bus lane but routing all remaining traffic through a single narrow street. You solve congestion in one area but create bottlenecks in another.

Example 3 – AI-assisted content creation tool: A newsroom adds AI to help reporters draft articles faster. Output volume rises, but editing teams are overwhelmed by fact-checking and style corrections. The bottleneck just moves downstream.

City planning parallel: This is like constructing a massive new residential tower without expanding the water lines or power grid—the new capacity strains the infrastructure that supports it.

The problem? Most organizations optimize for local performance (“Did this feature hit its adoption target?”) instead of global throughput (“Did this change improve the whole system’s speed, resilience, and value delivery?”).

And because the system is interconnected, solving one problem often creates new friction elsewhere. That’s why adding yet another feature can slow onboarding, confuse users, or break downstream workflows.

What’s needed instead is governed, modular composition—designing like a city planner, not a developer dropping buildings at random. In a city, you don’t just plop a skyscraper wherever there’s space; you consider roads, utilities, zoning, and how it will connect to what’s already there. Product systems deserve the same level of intentionality.

Reframing the Why – Jobs to Be Done at the System Level

For years, “Jobs to Be Done” has been the playbook for product teams. You zero in on the user’s immediate goal — “When I’m a business traveler, I want to check in quickly so I can get to my meeting.” It’s a great way to make one step smoother.

But the problem is that in the AI era, that focus can blind you. You end up perfecting a single step in a process that’s broken end-to-end. AI blows up the old constraints those jobs were written for — which means the real opportunity isn’t shaving seconds off a task, it’s re-wiring how the whole system works.

Most companies default to making individual steps faster or cheaper. The smarter move is to design the system so every part works in sync, all coordinated toward a single outcome.

Case Study #1: Coordinate Operations Around Revenue, Not Just Efficiency

Problem

A Fortune 500 HVAC company Jerrell worked with was hemorrhaging money on onsite visits that took too long. Long visits cut the number of calls a field tech could complete each day, which capped potential revenue. The working assumption: field techs were spending too much time looking up information about the equipment they needed to fix.

The JTBD seemed obvious: “Help field techs find repair manuals and parts information faster.”

The team built an AI assistant for instant technical documentation lookup. It shaved a few minutes off each visit, but the bigger issues (low upsells, unplanned repeat visits, wasted truck rolls) remained untouched.

Solution: System-Level Coordination Powered by AI

Jerrell reframed the goal: “How can the entire service operation work together to grow revenue?”

Instead of just speeding up manual lookups, the team designed a coordinated service system where AI linked:

Route planning with inventory availability

Equipment diagnostics with customer purchase history

Predictive maintenance schedules with seasonal demand windows

Now, every tech dispatched arrived knowing:

Which equipment was near end-of-life

Which upgrades the customer was most likely to approve

Which parts were in-stock for same-day installation

Impact

+40% upsell conversion

Technicians consistently turned routine calls into high-value sales

Repeat truck rolls dropped because jobs were completed on the first visit

Lesson: Don’t use AI to make today’s jobs faster — use it to redesign the job so every action directly drives the top business outcomes.

Case Study #2: Design Systems That Anticipate and Act, Not Just Respond

Problem

Another project Jerrell worked on was with a hotel chain that wanted to improve guest satisfaction. Bookings were down. Reviews hinted at the cause: “Staff was polite, but it felt like they just wanted to get me out of line.” Guests weren’t angry — they were disengaged.

The quick fix practically wrote itself: give front desk clerks an AI tool that could spit out pre-written answers to common questions, plus upsell scripts for the occasional room upgrade.

The team built it, and it did exactly that. Clerks could respond faster, stick to the script, and check people in with military precision. And yet… nothing meaningful changed. Bookings didn’t bounce back. Spend per guest stayed flat. The experience was still the same thin layer of transactional service, just delivered at speed.

Solution: AI-Driven Guest Journey Coordination

Jerrell shifted the question from “How do we answer faster?” to “How do we anticipate and maximize guest value?”

AI became the hub linking:

Guest preference profiles from past stays

Real-time room availability and staffing levels

Local events, weather, and seasonal packages

This let the system proactively design personalized stays:

Recommending spa treatments during rainy days

Timing restaurant offers to guest schedules

Suggesting local experiences aligned with guest interests

Impact

+23% ancillary revenue (upsold amenities, experiences, and services)

+18% lift in repeat bookings

Higher guest satisfaction scores from personalized service flow

Lesson: Use AI to unify data, timing, and activities across every customer touchpoint so that actions compound into measurable value for your user.

Most product teams are still asking the wrong question. They're optimizing for how people navigate broken systems instead of asking what becomes possible when those systems are rebuilt from the ground up.

Old Approach vs New Approach

The Business Impact of Coordination

In most companies, the way the org is structured directly shapes the way the product behaves. If teams operate in silos, customers feel it — through disjointed workflows, inconsistent messaging, and features that don’t quite fit together. Poor internal coordination doesn’t just create inefficiency; it fragments the user experience.

Coordination is often mistaken for a tax on speed — extra meetings, endless Slack threads, more process. But in reality, it’s an investment. Done well, it compounds in value over time, paying dividends in faster execution, cleaner handoffs, and a product that feels whole. And critically — it’s not process for process’ sake. It’s about aligning the entire system around the end user and the core business problem you’re solving, so every decision moves in the same direction.

If you’re badly coordinated around the problem, you’re badly coordinated overall — and it will show. It will show in how slow you move, in how messy your execution feels, and in the cracks your users encounter. It also reveals a deeper issue: you don’t truly understand the problem. And when that’s the case, there’s a high chance the user won’t feel the difference you set out to make.

Take onboarding as an example.

The real problem: new users need to reach their first moment of value quickly and confidently. If they stall or get confused, they never experience the payoff your product promises — adoption drops, retention suffers, and all the money you spent acquiring them is wasted.

In a poorly coordinated org, each team makes changes in isolation:

Marketing’s tweak: Adds three new questions to capture richer lead data.

Product’s tweak: Inserts an interactive tutorial relevant to only one segment.

Compliance’s tweak: Requires document verification earlier to reduce fraud.

Support’s tweak: Adds a mid-flow help widget that opens in a new tab.

Each change makes sense locally — but together, they bloat the flow, turn 90 seconds into 7 minutes, double drop-off, and tank activation. Different product lines even run different versions, multiplying the work for marketing, sales, and analytics.

Here’s the contrast:

The takeaway: coordination isn’t about adding process — it’s about building a shared mental model of the problem, then attacking it in a way that makes every change push toward the same outcome. AI can supercharge execution, but only if the system is already aligned on what matters.

When systems are designed with coordination at the core, what used to be seen as a “cost center” — operations, support, enablement — starts generating growth. Shared playbooks, integrated tooling, and feedback loops enable repeatability. Repeatability enables scale. And scale, done right, drives margin.

In the AI era, where capabilities can be cloned in days, your enduring advantage won’t be what you build — it will be how well you coordinate. And the only coordination that matters is the kind that keeps the user’s problem at the center. That orchestration is the moat.

IGG Playbook – Tactics for Designers, PMs, and Builders

Think of this as your operating manual for building in the AI era—small, intentional actions that compound into system-wide leverage. Tackling the system doesn’t mean doing everything; it means identifying the few nodes where change will create the widest ripple effect.

1. Daily Behaviors that Reinforce System Coherence

Design for context reuse, not just feature delight

Every new capability should plug into existing context flows so it strengthens the ecosystem. Avoid one-off “feature islands.”

Example: A healthcare app’s new “upload insurance card” flow also populates billing, appointment, and pharmacy systems, so the same data improves multiple journeys.

Evaluate with system-level metrics, not just local KPIs

Measure improvements across the whole journey—time to complete a job, reduction in hand-offs—not just success of one step.

Example: Instead of only tracking chatbot resolution rate, measure “time to resolution” across all support channels after deploying the bot.

Prioritize interfaces that close feedback loops

Build touchpoints that capture real user signals (errors, satisfaction, drop-offs) quickly so the system adapts in real time.

Example: An AI writing tool flags when users undo an auto-edit and feeds that signal back so the model learns to avoid that change in the future.
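The chatbot example under “Evaluate with system-level metrics” can be made concrete with a small Python sketch that computes both numbers from the same ticket log. The `Ticket` record, its fields, and every sample value below are hypothetical; the point is only that a high bot resolution rate and a worse cross-channel time to resolution can coexist.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Ticket:
    # Hypothetical ticket record; field names are illustrative, not from any real tool.
    channel: str            # "bot", "email", "phone", ...
    resolved_by_bot: bool   # True if the bot closed the ticket with no human involved
    hours_to_resolve: float


def bot_resolution_rate(tickets: list[Ticket]) -> float:
    """Local KPI: share of bot-channel tickets the bot closed on its own."""
    bot = [t for t in tickets if t.channel == "bot"]
    return sum(t.resolved_by_bot for t in bot) / len(bot) if bot else 0.0


def mean_time_to_resolution(tickets: list[Ticket]) -> float:
    """System-level metric: average hours to resolve, across ALL channels."""
    return fmean(t.hours_to_resolve for t in tickets)


# Hypothetical "before" log: every ticket handled by a human.
before = [Ticket("email", False, 4), Ticket("phone", False, 5), Ticket("email", False, 6),
          Ticket("phone", False, 8), Ticket("email", False, 10)]

# Hypothetical "after" log: the bot closes easy FAQs almost instantly, but one escalated
# edge case lingers and the remaining human queue is now made up of hard cases.
after = ([Ticket("bot", True, 0.1) for _ in range(4)]
         + [Ticket("bot", False, 30), Ticket("email", False, 12),
            Ticket("phone", False, 14), Ticket("email", False, 16)])

print(f"bot resolution rate: {bot_resolution_rate(after):.0%}")
print(f"mean hours to resolve: before {mean_time_to_resolution(before):.2f}, "
      f"after {mean_time_to_resolution(after):.2f}")
```

Read alone, the first line makes the bot look like a win; the second shows the support system as a whole got slower on this made-up data, which is exactly the signal a local KPI hides.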

2. Structuring Sprint Rituals Around System Health

System Check-In at Sprint Planning – Before committing, review cross-team dependencies, upstream/downstream effects, and integration points.

System Impact Review in Retro – Ask “Did we reduce friction in the broader flow?” and “What new dependencies did we create?”

Cross-Functional Pairing in Grooming – Designers, PMs, and engineers jointly assess systemic impact—so decisions aren’t made in functional silos.

Example: An AI-powered real estate pricing feature is reviewed not just for algorithm accuracy, but for its effect on lead conversion, listing views, and sales team workflows.

3. Re-Center on JTBD in the AI Era

With AI, many jobs can be solved with smaller, more elegant system changes rather than heavyweight new builds.

Always start with the Job to Be Done, not the AI tool.

Ask: If this job could be done automatically or with less interface complexity, what’s the lightest system design that would enable it?

Avoid overbuilding—system leverage means picking the smallest set of changes that create the widest ripple effect.

Example: Instead of building a new “AI deal room” for real estate agents, embed an AI summary widget directly into the existing listing view—solving the “need to brief clients quickly” job without another tool or login.

4. Standing Up a Coordinated System (12-Week Roadmap)

Start by finding the few points that matter most. Improve them. Measure. Repeat.

Quick Glossary for Builders

Node – Any step, service, or data point in the user’s journey. Think of it like an intersection in a city: if it’s congested, everything slows down.

Core Node – A node that touches many other parts of the system. Fixing it creates ripple effects everywhere.

System Impact Section – A short write-up in your PRD or ticket that asks: How will this change affect the broader system, not just this feature?

Leverage Mapping – The process of drawing out the end-to-end job (JTBD), marking all the nodes, and circling the few that have outsized influence.

Weeks 1–2 – Find the Core Nodes

What to do:

Map the job-to-be-done from start to finish. Each step = a node.

Circle the core nodes — the ones that appear in multiple flows or feed many others.

Define 2–3 system-level metrics (e.g., time to complete the job, hops removed).

Example (Telehealth):

JTBD = “Complete a patient visit.”

Nodes: Appointment booking, Insurance verification, Doctor’s notes, Prescription.

Core nodes: Insurance verification and Doctor’s notes.

Metrics: Visit completion time, duplicate data entry, drop-off rate.
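Circling core nodes usually happens on a whiteboard, but the rule from Weeks 1–2 (a node that appears in many flows is a core node) is simple enough to sketch in Python. The extra flows below are hypothetical extensions of the telehealth example, and the `min_flows=3` cutoff is an assumption; a real map might also weigh how many other nodes each one feeds.

```python
from collections import Counter

# Hypothetical telehealth journeys: each JTBD maps to its ordered list of nodes.
FLOWS = {
    "Complete a patient visit": ["Appointment booking", "Insurance verification",
                                 "Doctor's notes", "Prescription"],
    "Book a follow-up": ["Appointment booking", "Insurance verification", "Doctor's notes"],
    "Request a refill": ["Insurance verification", "Doctor's notes",
                         "Prescription", "Pharmacy integration"],
    "Resolve a billing question": ["Insurance verification", "Doctor's notes", "Billing"],
}


def core_nodes(flows: dict[str, list[str]], min_flows: int = 3) -> list[str]:
    """Nodes that appear in at least `min_flows` distinct flows, most shared first."""
    # dict.fromkeys de-duplicates nodes within a flow while keeping a deterministic order,
    # so ties in most_common() come out in first-seen order.
    counts = Counter(node for steps in flows.values() for node in dict.fromkeys(steps))
    return [node for node, n in counts.most_common() if n >= min_flows]


print(core_nodes(FLOWS))
```

With these sample flows, the sketch circles Insurance verification and Doctor’s notes, the same two core nodes named in the example.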

Weeks 3–4 – Wire & Ship the First Change

What to do:

Add feedback capture to your first core node (e.g., “Was this correct?” prompts, undo signals).

Ship a thin, reversible improvement.

Measure against system-level metrics.

Example:

First node = Insurance verification.

Add a simple confirmation step when patients upload cards.

Improvement: Auto-fill insurance info into the patient profile.

Impact: Faster visits, fewer dropped calls.
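Wiring feedback into a node mostly means logging two kinds of events: the user accepted the AI’s output, or had to fix it. Here is a minimal Python sketch, assuming a hypothetical event shape with `node` and `kind` fields, that turns those events into a per-node correction rate you can track alongside the system-level metrics chosen in Weeks 1–2.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackEvent:
    # Hypothetical event emitted by a "Was this correct?" prompt or an undo/edit.
    node: str   # e.g. "Insurance verification"
    kind: str   # "confirmed" (user accepted the output) or "corrected" (user fixed it)


def correction_rates(events: list[FeedbackEvent]) -> dict[str, float]:
    """Share of feedback events per node in which the user had to correct the output."""
    totals: dict[str, int] = defaultdict(int)
    fixes: dict[str, int] = defaultdict(int)
    for event in events:
        totals[event.node] += 1
        if event.kind == "corrected":
            fixes[event.node] += 1
    return {node: fixes[node] / totals[node] for node in totals}


# Hypothetical week of auto-filled insurance cards: 8 accepted as-is, 2 edited by patients.
events = ([FeedbackEvent("Insurance verification", "confirmed")] * 8
          + [FeedbackEvent("Insurance verification", "corrected")] * 2)
print(correction_rates(events))
```

A rising rate after a change is a cue to revisit the node before moving on; a falling one is some evidence the thin slice is working.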

Weeks 5–6 – Address the Next Node

What to do:

Move to the second core node.

Add feedback wiring.

Ship a thin-slice improvement.

In every PRD, include a System Impact Section (describe ripple effects).

Example:

Next node = Doctor’s notes.

Capture when doctors delete or fix AI transcriptions.

Thin-slice: AI transcription embedded directly in notes screen.

Retire the old “upload dictation” step.

Weeks 7–8 – Close the Loops

What to do:

Review both nodes together.

Tighten connections (share context between them).

Update your Leverage Map — the diagram of nodes, core nodes, and flows.

Example:

Insurance verification and notes now connect.

Verified insurance shows inline in the notes interface.

Doctors no longer ask patients for info they already entered.

Updated leverage map shows fewer hops for both patient and doctor.

Weeks 9–10 – Iterate & Scale

What to do:

Identify the next core node.

Repeat the improve–measure cycle.

Expand feedback wiring to more touchpoints.

Example:

Add the Patient profile node.

Consolidate data flows from insurance, notes, and prescriptions.

Feedback loop: track when patients correct personal details.

Outcome: Faster visits and fewer pharmacy mismatches.

Weeks 11–12 – Institutionalize

What to do:

Make leverage mapping and reviews part of sprint rituals.

Require a System Impact Section in every PRD.

Set a quarterly target: improve or retire at least one core node.

Example:

New JTBD = “Refill a prescription.”

Core nodes = Prescription data + Pharmacy integration.

Team now runs the same playbook: map → circle nodes → wire feedback → ship thin slice → measure → update map.

✅ By Week 12:

A living leverage map.

2–4 core nodes improved with feedback wired in.

Redundant flows retired.

A repeatable method for finding and fixing the next leverage point.

Questions to Ask Before Building with AI

AI gives us the power to build and ship faster than ever before. But speed creates its own traps. The old truth that not everything is worth building hasn't changed; what's changed is the catastrophic cost of getting it wrong.

When you build with AI, you're not just creating a feature; you're embedding logic deep into your company's nervous system. Building the wrong thing doesn't just create waste; it scales dysfunction at an unprecedented rate.

So, how do you ensure you’re building a scalable system instead of a scalable screw-up?

The good news is there's a way to solve this. It starts by asking four systemic questions before you go headfirst into development. To see this framework in action, consider the quietly devastating example of AI-as-coordination happening in your garbage can.

Waste Management didn't just add sensors to dumpsters. They turned the entire concept of "trash pickup" into an algorithmic system, and it provides a perfect playbook.

Here's how to apply that thinking:

1. The Representation Question: Are you creating truth or automating lies?

For decades, garbage collection was based on schedules and guesswork. "We pick up every Tuesday" regardless of whether bins were empty or overflowing. Waste Management eliminated the fiction. Smart sensors in dumpsters, trucks, and processing facilities create real-time "waste intelligence"—exactly how full every container is, what type of waste, optimal pickup timing, contamination levels. Mathematical precision about what actually needs collection.

Before you build, ask: Is your AI translating messy inputs, or is it enforcing a standard that creates unified context for reliable decision-making?

2. The Composition & Governance Question: Are you reducing friction or scaling chaos?

Waste Management didn't give garbage trucks better GPS. They made traditional waste management routes obsolete. Their AI coordinates drivers, processing facilities, recycling centers, and customer billing in unified workflows where trucks only visit containers that actually need emptying. No more driving predetermined routes to empty bins. Pure algorithmic coordination from sensor data to optimized collection.

Before you build, ask: Does this tool pull all actors into a shared process that reduces system-wide friction, or does it give them another place to hide, ultimately scaling your coordination problems?
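The shift described in this question, from fixed routes to visiting only containers that need it, can be sketched as a filter over sensor readings. This Python example is purely illustrative: the `Reading` fields, the 75% fill threshold, and grouping stops by waste stream are assumptions made for the sketch, not a description of how Waste Management’s system actually works.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Reading:
    # Hypothetical sensor reading for one container.
    container_id: str
    fill_ratio: float  # 0.0 = empty, 1.0 = full
    waste_type: str    # e.g. "landfill", "recycling"


def due_for_pickup(readings: list[Reading], threshold: float = 0.75) -> list[Reading]:
    """Containers at or above the fill threshold, fullest first; the rest are skipped."""
    due = [r for r in readings if r.fill_ratio >= threshold]
    return sorted(due, key=lambda r: r.fill_ratio, reverse=True)


def stops_by_stream(due: list[Reading]) -> dict[str, list[str]]:
    """Group due containers by waste stream so each load goes to a facility that accepts it."""
    groups: dict[str, list[str]] = defaultdict(list)
    for r in due:
        groups[r.waste_type].append(r.container_id)
    return dict(groups)


readings = [Reading("A", 0.90, "landfill"), Reading("B", 0.30, "landfill"),
            Reading("C", 0.80, "recycling"), Reading("D", 0.75, "recycling")]
print(stops_by_stream(due_for_pickup(readings)))
```

Container B is simply left off this run: the schedule follows measured need rather than the calendar.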

3. The Decision-Making & Execution Question: Are you helping humans or replacing judgment?

Waste Management's AI makes decisions that used to require armies of route planners and dispatchers: which trucks go where, when to empty containers, how to optimize fuel consumption, which facilities can handle which waste streams. Drivers aren't planning routes. They're executing algorithmic instructions. The system coordinates thousands of micro-decisions across multiple cities simultaneously.

Before you build, ask: Where is decision-making authority moving? Are you helping humans do their old job faster, or are you embedding your best strategic logic into a system that executes at a scale no human team ever could?

4. The Feedback Question: Are you measuring tasks or system health?

Waste Management doesn't track "trucks dispatched" or "bins collected." They monitor the health of entire urban waste ecosystems: contamination rates, recycling efficiency, fuel optimization, predictive maintenance across fleets. Humans aren't managing individual routes; they're evaluating whether the entire waste-flow prediction engine is optimizing city cleanliness.

Before you build, ask: Is the human-in-the-loop designed to correct AI mistakes, or are they positioned to evaluate the holistic impact of the entire system, closing the feedback loop on what truly matters?

Waste Management succeeded because they understood that garbage collection wasn't their product. Urban efficiency coordination was their product. They built a system so superior that cities are redesigning their entire waste infrastructure around algorithmic intelligence instead of human schedules.

The challenge today isn't finding a use case for AI. It's having the organizational courage to ask these questions and act on the answers, no matter which legacy roles and processes become obsolete.

🔥 System Design is the AI Era's Missing Leverage

In the AI era, speed alone isn’t a competitive advantage — coherence is.

The organizations that win are the ones that connect their efforts into systems that reinforce each other, rather than scattering into isolated projects that look good in slides but break in reality.

Strong coordination ensures that every improvement compounds — onboarding gets smoother, workflows feel effortless, and the value of your data multiplies over time. Weak coordination guarantees the opposite: local wins that leave the end user unchanged.

AI raises the stakes. It rewards teams who treat system design as a first-class discipline — governing interfaces, closing feedback loops, and making a small number of high-leverage changes that drive the most downstream value.

But here’s the nuance most miss: system design is not just an architectural or operational challenge. It’s a design challenge. The way your teams model workflows, shape interfaces, and define affordances determines how the system behaves, how easy it is to extend, and how seamlessly AI can plug into it.

That’s where we’ll focus next: approaching system design in AI from the designer’s point of view — how to create the cues, constraints, and interactions that make a system both adaptable and coherent, even as AI reshapes the way work gets done.